During the years I had many deliberations over the fact if either NAS would be used or it wouldn’t be used for the video archives created by video logger system such as VideoPhill Recorder. air max pas cher At first, I was firm believer in one methodology, then completely turned my side to the other, and now, … Well, read on, and I’ll take you through it.

Stakes

On the one side of the stake set we have actual requirements, and on the other there are considerations. Actual requirement are sometimes hard to pinpoint at first, but they always come out sooner or later.

So, let me list possible requirements that might be in effect here…

Common low level requirements

Storage for video recording (for logging purposes) needs to have following abilities:

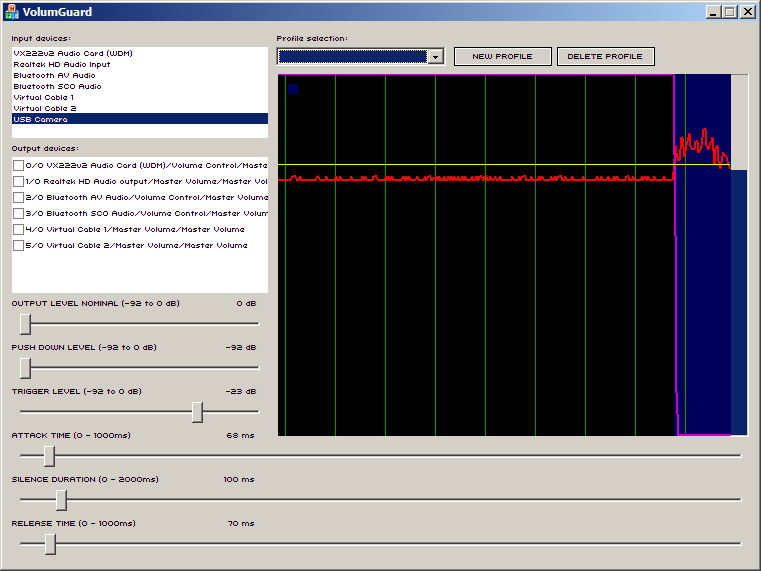

- low but constant and sequential write rate – data rate for 4 channels are as low as 5mbit per second (500kbytes/sec) but is CONSTANT and SEQUENTIAL – there won’t be much stress for the hard drive because of constant seeking

- high durability over time – what gets written once, has to be there. Nike Air Max TN Homme It should survive single drive failure

- reading isn’t common, but when done, it has to be sustainable, but again at low data-rate and great predictability (it usually is sequential)

From everything above, I can guess that any data storage expert would read RAID 5 and won’t allow you to create anything else for the video archive storage.

Archive duration scalability

The archive duration is directly proportional with the hard drive space that is available. To determine what kind of hard drive space you need for your first installation, you can use on-line hard drive size estimator calculator that I created right for this blog.

If you plan extend the duration of your archive one day, you have this requirement, and you have to plan for it. Having the storage at one place can simplify the adding of the drive space, but can also completely block it.

Let’s say that you have the archive of 40 channels that span 92 days (3 months). And let’s say that you decided to use 1mbit video with 128 kbit audio for the archive. By using the calculator above, you’ll find out that you have 42 terabytes of storage already in place. Even at this date, that kind of storage set in one place is kind-of-a challenge to build.

If you have already invested in 42 TB storage system, and have foresight to plan for an upgrade to say its double size for it, you are in luck. But, say that after a few more months (just 3) your management decides to expand the requirement to 12 months of storage. Wow. nike air max 90 pas cher Now, you have to have 127 TB total. If the current system will hold that much drive space, again you are in luck, however – say it doesn’t. Nike Air Max 2016 Heren blauw Your options are:

- add one more to the chain

- create a bigger unit, copy everything to it, scrape the current one

I’ll stop my train of thought here, and leave you only with few things to think about before I go on with other requirements: who needs used 82 TB system (if you want to sell it), do you know how much it is to COPY 82 TB even at extreme network speeds, adding one more will break the ‘all in one place’ requirement, …

Having it all in one place (i.e. for web publishing)

If you need everything in one place for the publishing, then this is a solid requirement. Scarpe Adidas Web server will have the content on its local hard drives, and it will publish it smoothly.

But, is that really a requirement? I must admit that I didn’t see web server that properly served files from the network locations (despite the thing that there are option to do that), but I’m sure that IIS and Windows Server gurus will be able to shut me down and say that this is normal thing that is done routinely. So, if we know that each channel recorder has its own directory ANYWAY, what’s the use of having

c:\archive\channel1

c:\archive\channel2

c:\archive\channel3

instead of

\\rec1\channel1

\\rec1\channel2

\\rec2\channel3

Reducing single point of failure

This requirement is very common, and having NAS system as a ‘point’ it brings us that having the NAS leads us to having single point of failure. I understand that there are multiple redundancies that could be installed into the system, such as RAID 5, or obscene configurations such as RAID 1+5. Note: for later, it seems that the article author has a same opinion on it as me:

Recommended Uses: Critical applications requiring very high fault tolerance. In my opinion, if you get to the point of needing this much fault tolerance this badly, you should be looking beyond RAID to remote mirroring, clustering or other redundant server setups; RAID 10 provides most of the benefits with better performance and lower cost. Not widely implemented.

In my words: if you need such system, it’s better to have recording drives distributed on each recording machine, have RAID 5 there, and additionally have NAS (or some other form of storage) to DUPLICATE everything.

Bandwidth issues

At a configuration with 4 channels recorded at one machine, and with above mentioned data rate for video and audio, each machine will produce 5 megabit of content every second. Roughly, that is .5 megabyte. Even ZIP drive could almost handle that. However, if you have 10 times that (for 10 recorders) and have central storage for the whole bunch of channels, that is 5 megabytes of data at a constant rate that never stops.

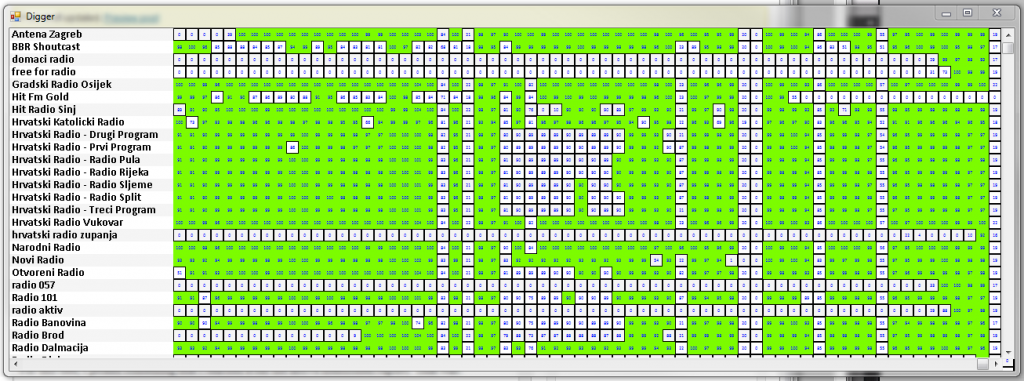

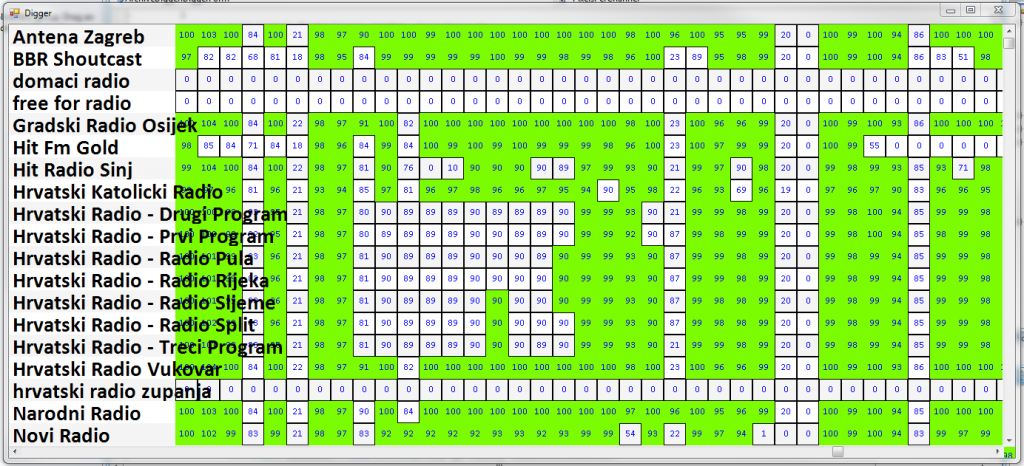

Consider that central storage in question is NAS has hard drives configured in RAID 5. That means that it will have to receive, calculate parity for, move the drive heads, write to drive, … It will be very busy NAS, and with everything else in mind, it won’t have a second of a break. Add to that occasional reading of the content from the archive diggers, and you’ll soon figure out that the NAS will have to take it all itself.

On the other hand, archive access applications such as VideoPhill Player doesn’t have anything against having the channels on different recorder machines.

Conclusion (for bandwidth issues) – having each recorder machine handle both encoding and storage for 4 channels will reduce single point of stress for both recording and the archive access.

Overall…

Having dumped my intuition in this few paragraphs, I hope that I presented case that is strong enough against having NAS for video logger/archive storage. Nike Air Max Goedkoop Again, everything said is from my experience on the subject, and I’m no storage expert who will talk petabytes, just a simple consultant trying to get my clients best bang for the buck.

![]()