This is a response to a question from one of my prospects, and it can be summarized as:

Why should I buy StreamSink at $10,000 when there is Replay A/V, which can do the same thing for $100 (if I buy 2 licences for 2 computers)?

I could raise several objections to the idea of using a consumer product for business purposes, but instead I'll try to focus on functionality (at least for this posting).

Purchasing and installing

I quickly purchased Replay A/V for $50, and went on to install it. Upon installation, it offered to install WinPopcap (to provide stream discovery) and some other utility for conversion of the saved material. I declined.

Entering stations

Now that it is installed, I will try to copy my stream list into it and have it record the streams continuously.

…

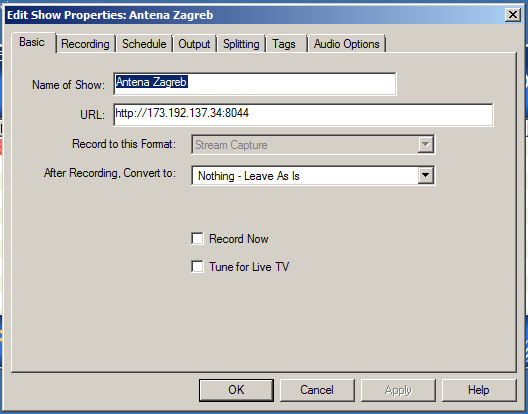

After some investigation, I found out that there is no way to enter the whole list of stations at once, so I'm going to enter them one by one.

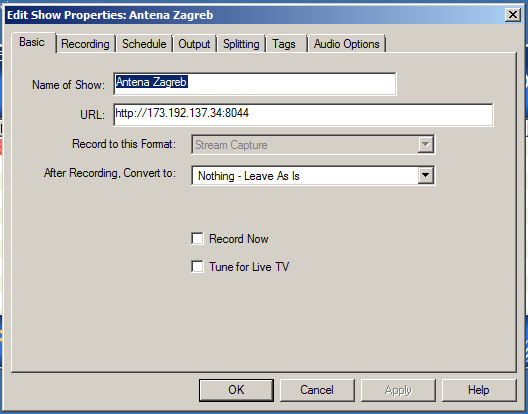

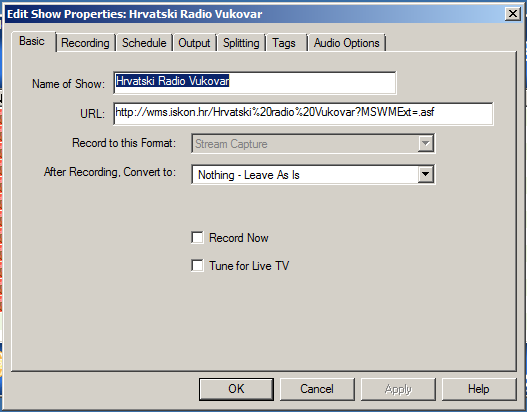

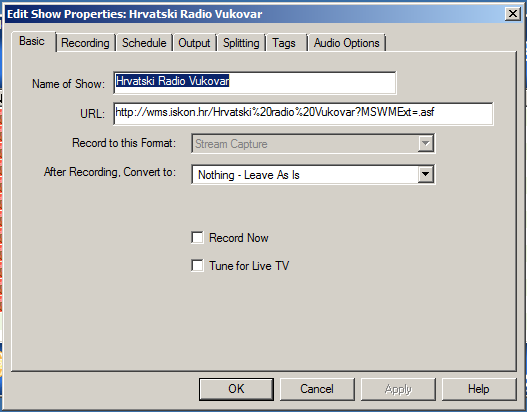

OK, I entered Antena Zagreb with its stream URL, and went on to find the start button for it. I found it in the context menu for the item on the list (right click, start-recording, …).

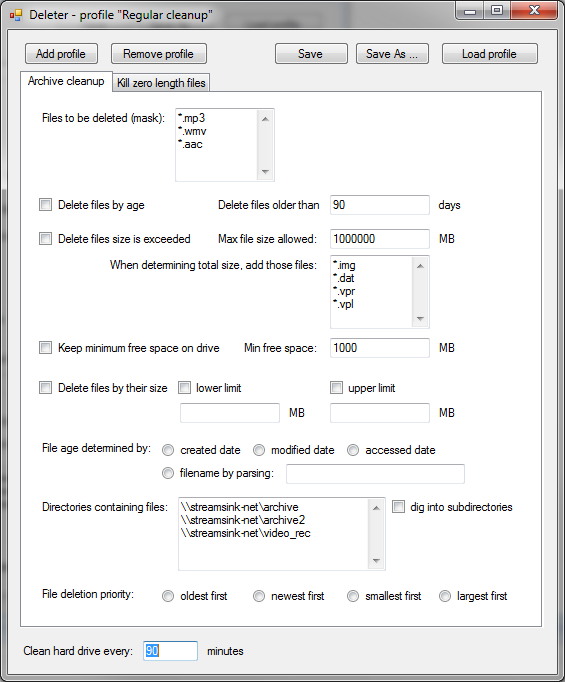

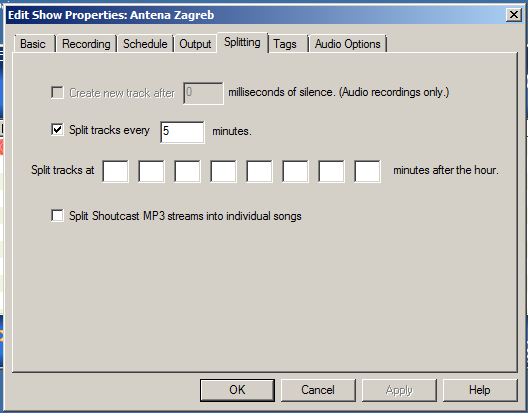

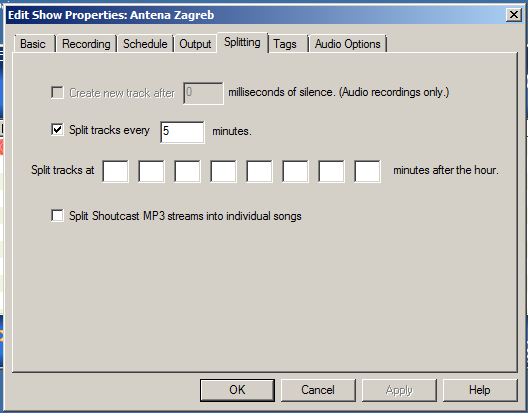

I remembered that when I went through the options for a channel, I found that you have to explicitly enable the option for splitting the file into segments, so I went on and did that.

I'll leave it running now and move on to entering the rest of the stations.

I was about 1/4 of the way down the list when I got to a WMA stream, and I was really curious whether it would be accepted, since there is no option anywhere to pick a stream type. It was, and for now it seems to be captured normally.

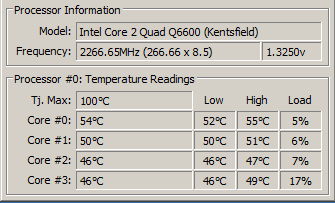

While I am entering data into the software and it splits files at every 5-minute interval, the whole GUI freezes and becomes unresponsive for 2-3 seconds. What I am interested in is whether there will be a gap in the recording of the station whose file is being cut. BTW, the computer I am running this analysis on isn't that weak…

Also, it seems that I entered a stream that doesn't exist. The application keeps trying to connect to it, and while doing so it freezes again for a few seconds. However, it's nothing to be alarmed about.

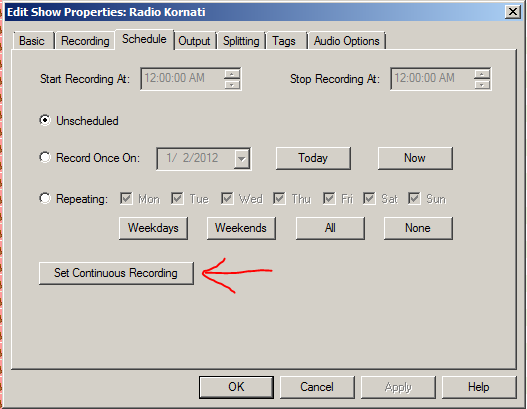

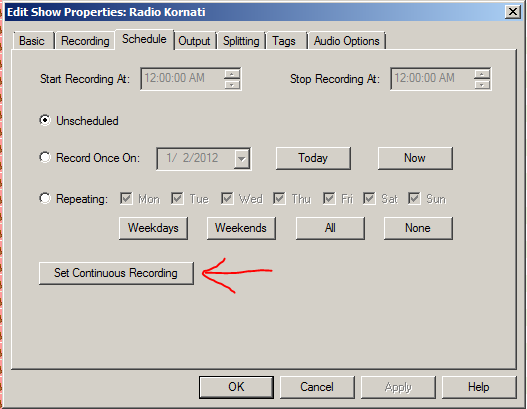

I also found out that for the app to keep retrying a failed connection, it has to be configured additionally, as that is not its default mode of operation.

…

…

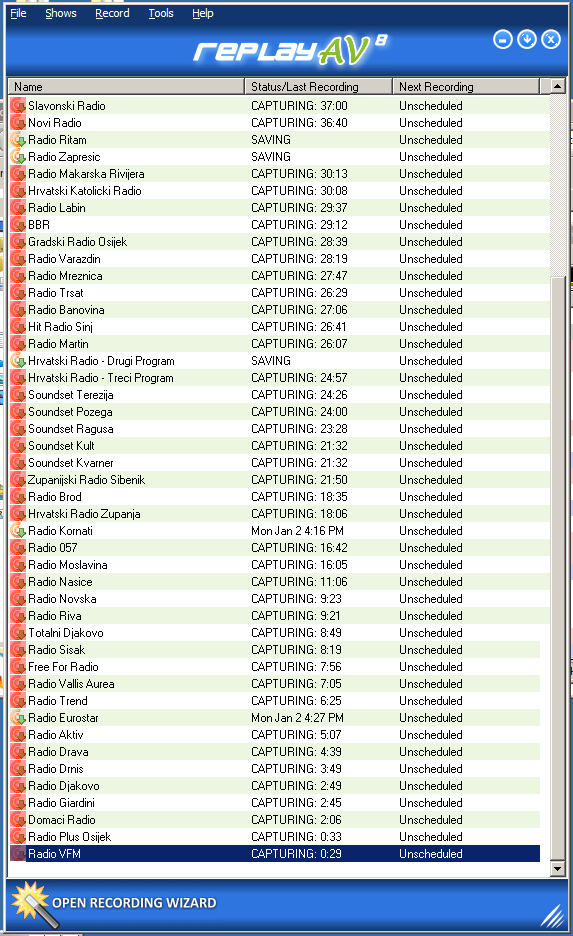

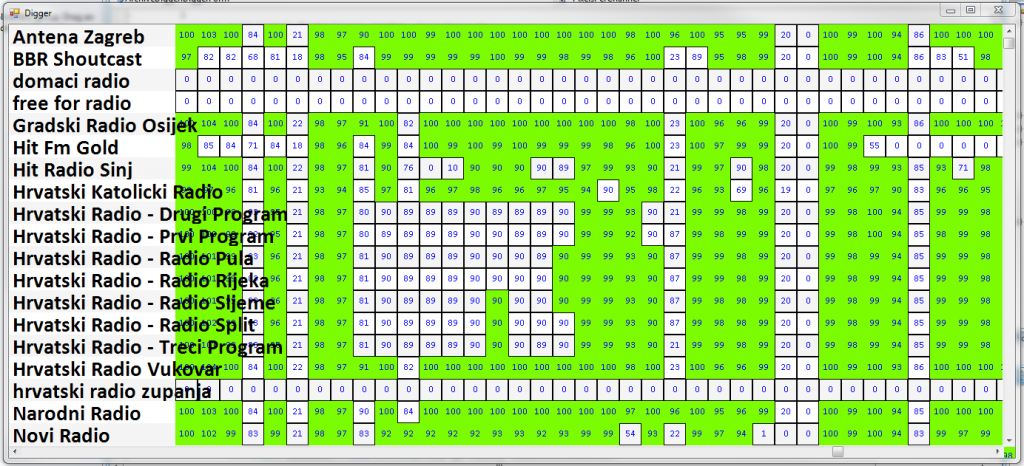

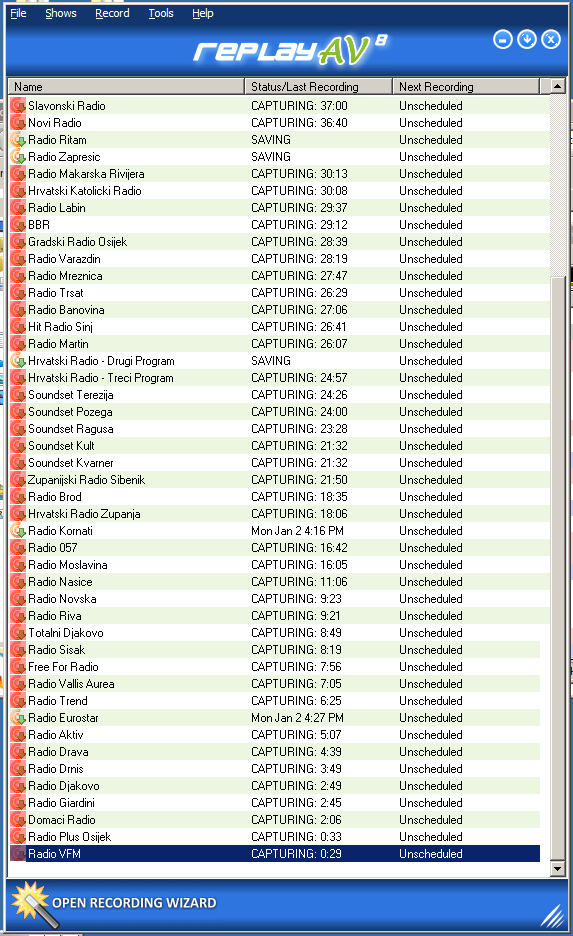

OK, so I finally entered all the stations. It gets rather annoying after a few minutes, because with the 5-minute chunk interval the app freezes rather frequently. That doesn't pose a problem AFTER everything is entered, but it really is annoying. Here is the filled-up application:

Testing the recorded stuff

To do that, I will first share the folder with the recordings so that I can see it from another (this) machine.

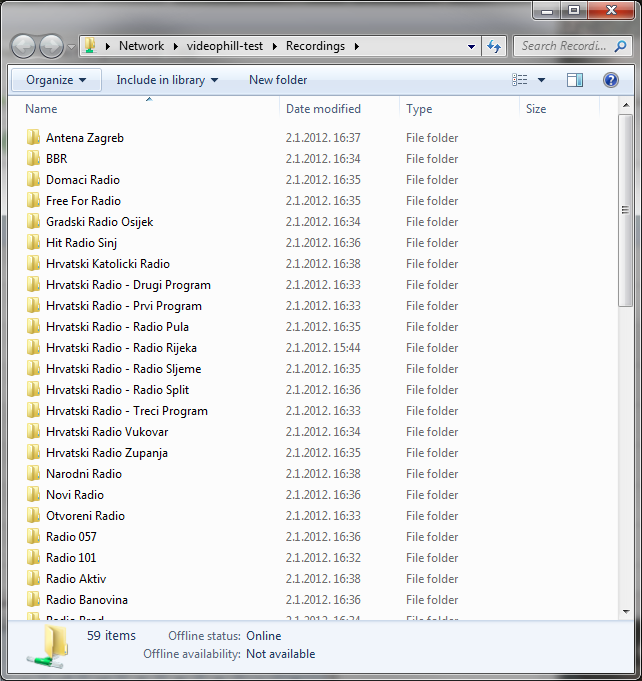

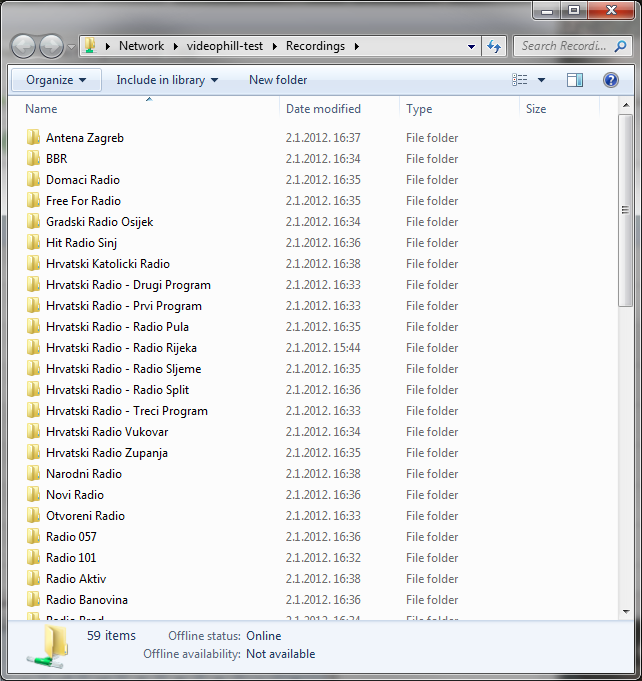

As expected, every channel is saved in its appropriate folder:

Now, let’s examine the contents of some folders that are recorded here…

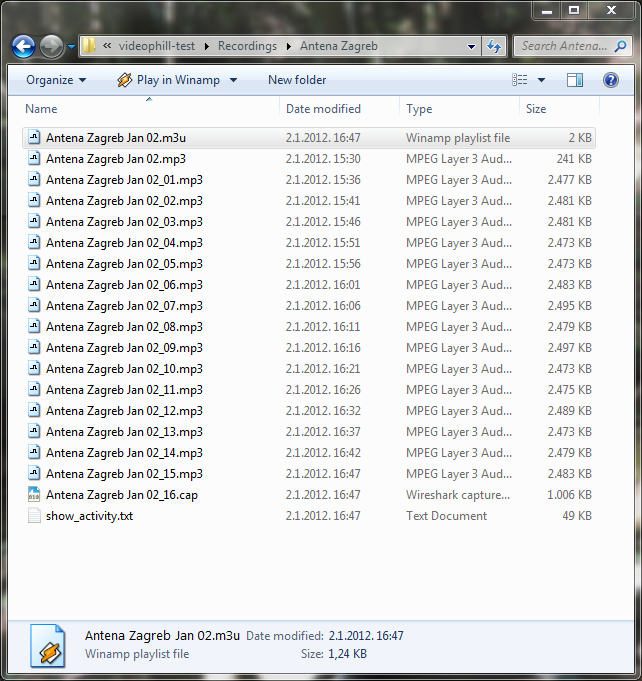

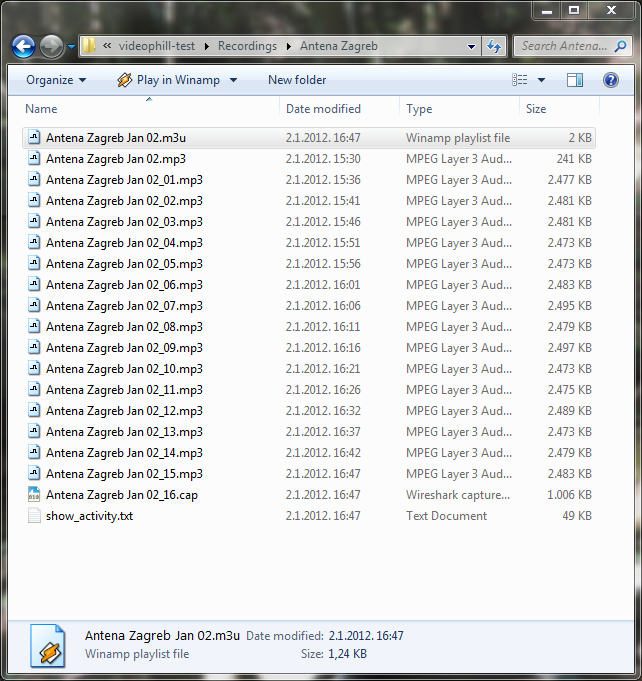

The first folder I have is Antena Zagreb, and here it is:

I won't comment on the file naming now, but I will tell you what happened when I double-clicked the .m3u file that should contain the list of recorded mp3 files. Winamp loaded it and CRASHED my machine completely. I'm not saying it will crash yours, but my Winamp goes berserk when faced with certain media files that it can't recognize. The problem here is that Antena Zagreb has an AACPLUS stream, which was interpreted erroneously, producing mp3 files that crashed Winamp. Here is one file for you to try; use it at your own risk.

Antena Zagreb Jan 02_05

Media Player crashed as well, but I could END it; with Winamp I had to restart the whole machine.

The last test I want to do in this post is to see whether consecutive files are saved with no gap between them. For that, I have to find an mp3 file that won't actually break my player.

Found it, and had no luck. Even with pure mp3 files, Winamp gives up and puts its legs in the air. I tested the same files with Media Player, and it seems that the recordings overlap by a few seconds, so that checks out.

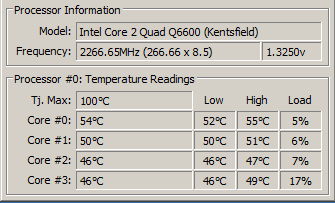

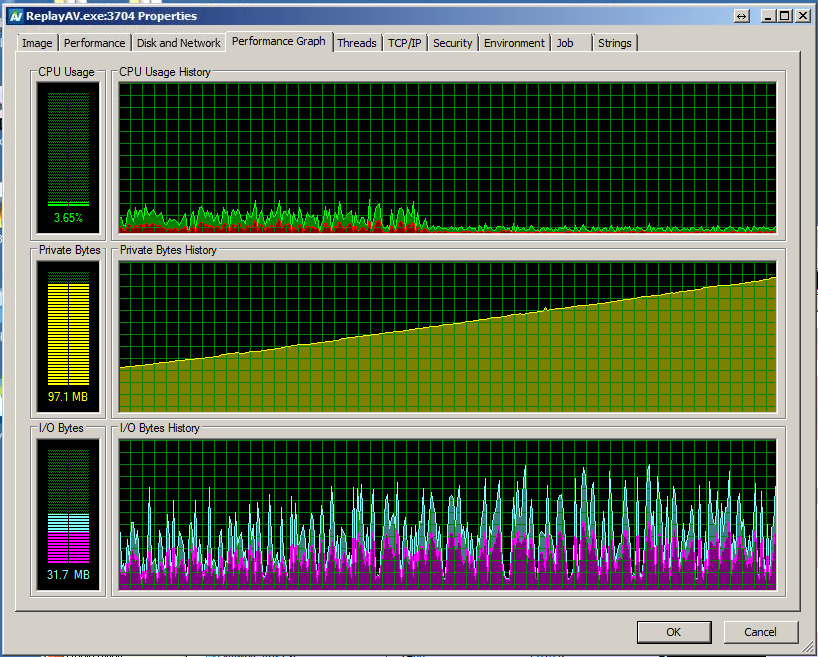

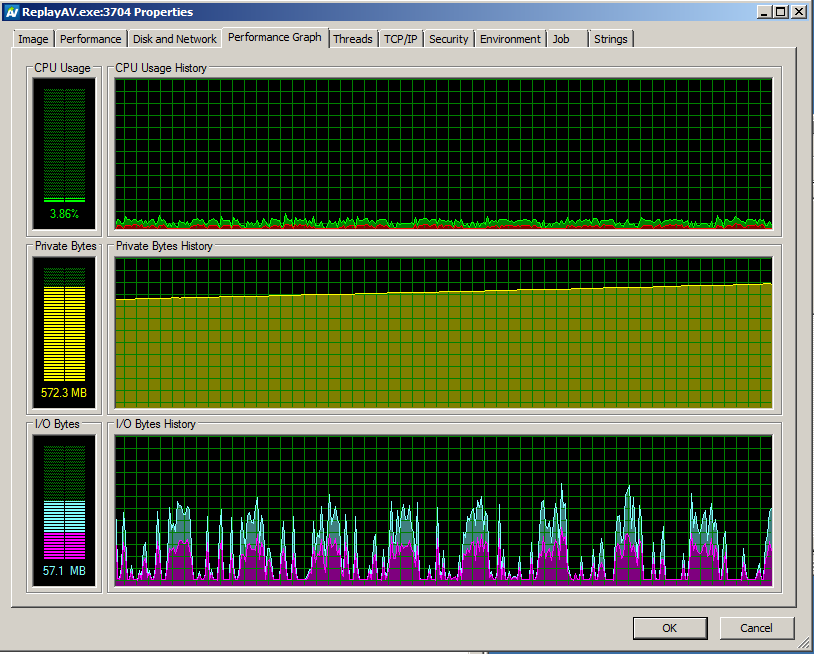

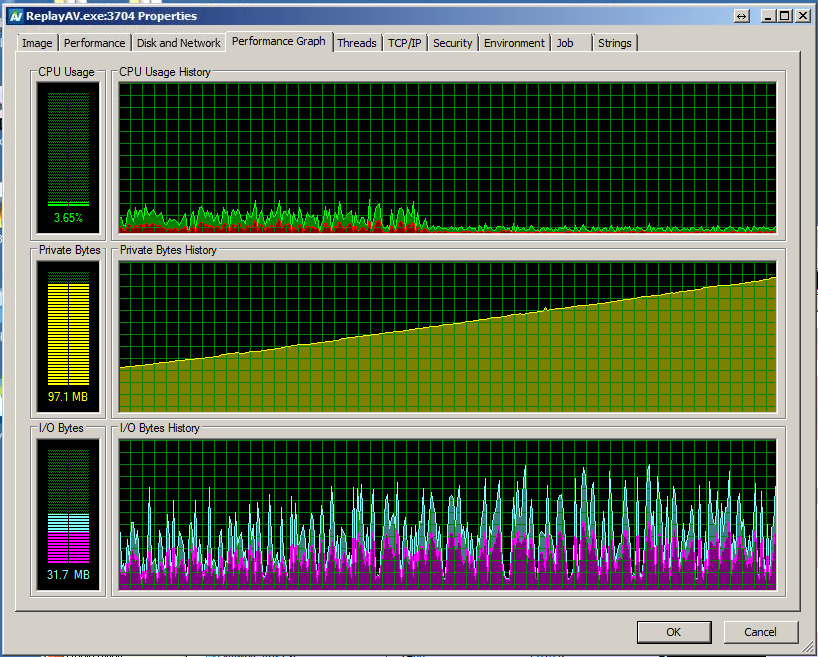

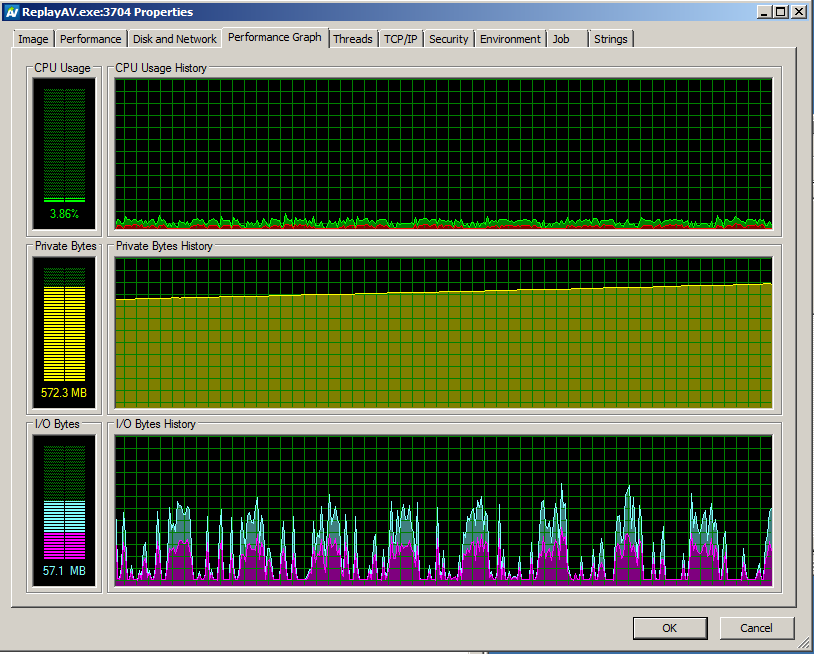

Before the conclusion, let’s just take a look at the resource usage of the application:

Conclusion

You might be able to use Replay A/V for your media monitoring purposes, and save a great deal of money. However, please note that:

- I didn't find any option for error reporting (which would let you see that a stream has been off-line for an extended time)

- if all the channels cut their files at the same time, the app would be unresponsive for at least 2*number_of_channels seconds

- CPU usage is minimal; however, I just found out that memory usage rises LINEARLY over time, and that will lead to imminent application death after some time (you do the math)

- I didn't use the scheduler to create persistent connections; if I did, and had a bad connection with lots of breaks, the app would be nearly impossible to use due to the freezing on every connection attempt

- there is no option (or I wasn't able to find it) for renaming the files so that they use time-stamped names

- it doesn't provide support for VideoPhill Player, which is an archive exploration tool created just for Media Monitors
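To put the freeze estimate from the list above into numbers, here is a minimal Python sketch. It only restates the 2*number_of_channels arithmetic; the 2-second freeze is the lower bound I observed, the 5-minute interval is the chunk length I configured, and the station count of 30 is a made-up figure for illustration, not my actual list size.

```python
# Worst-case GUI freeze if every channel splits its file at the same moment.
# Assumptions (not precise measurements): 2 s freeze per split, 5-minute chunks.

FREEZE_PER_SPLIT_S = 2.0    # lower bound of the observed freeze, in seconds
CHUNK_INTERVAL_S = 5 * 60   # split interval configured per channel, in seconds


def worst_case_freeze_seconds(channels: int,
                              freeze_per_split: float = FREEZE_PER_SPLIT_S) -> float:
    """Total freeze time when all channels split back to back."""
    return channels * freeze_per_split


def frozen_fraction(channels: int,
                    freeze_per_split: float = FREEZE_PER_SPLIT_S,
                    interval: float = CHUNK_INTERVAL_S) -> float:
    """Share of each chunk interval during which the GUI would be frozen."""
    return worst_case_freeze_seconds(channels, freeze_per_split) / interval


if __name__ == "__main__":
    n = 30  # hypothetical number of stations
    print(worst_case_freeze_seconds(n), "seconds frozen per interval")
    print(f"{frozen_fraction(n):.0%} of every 5-minute interval")
```

With the longer 3-second freezes I sometimes saw, pass `freeze_per_split=3.0` to get the upper end of the estimate.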

Additional info…

After several hours (around 6), this is the memory usage as captured with Procexp.

For those who can't read a memory usage graph, this means that the application has a memory leak, and at this rate it would exhaust its memory in less than 24 hours, since it is an x86 process.
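If you want to do the math yourself, the extrapolation can be sketched in a few lines of Python. This assumes the growth stays linear and that the process can address the usual 2 GB of user space that a 32-bit Windows process gets by default. The function name and the sample figures in the usage part are placeholders; read the real start and current values off your own Procexp graph.

```python
# Linear extrapolation of a memory leak toward the address-space limit.
# Assumption: 2048 MB default user address space for a 32-bit Windows process.

USER_ADDRESS_SPACE_MB = 2048.0


def hours_until_limit(start_mb: float, current_mb: float, elapsed_hours: float,
                      limit_mb: float = USER_ADDRESS_SPACE_MB) -> float:
    """Hours left before memory usage reaches limit_mb, assuming linear growth."""
    if elapsed_hours <= 0:
        raise ValueError("elapsed_hours must be positive")
    rate_mb_per_hour = (current_mb - start_mb) / elapsed_hours
    if rate_mb_per_hour <= 0:
        raise ValueError("memory is not growing, nothing to extrapolate")
    return (limit_mb - current_mb) / rate_mb_per_hour


if __name__ == "__main__":
    # Placeholder readings, NOT the values from my graph.
    remaining = hours_until_limit(start_mb=100, current_mb=600, elapsed_hours=6)
    print(f"about {remaining:.1f} more hours until the limit is reached")
```

The real process would most likely fail somewhat earlier than this estimate, because address space fragmentation makes large allocations fail before the limit is actually hit.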